15 December

Fast Track Apache Sparks

Description

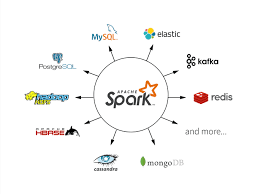

Apache Spark is a parallel processing framework that supports in-memory processing to boost the performance of big-data analytical applications. Its core engine runs on a cluster whose resources are managed by a cluster manager such as YARN. In simple terms, the Spark architecture is known for its speed and efficiency. Its key features are:

- It processes data faster, which saves time and cost in read and write operations.

- It allows real-time streaming, which makes it a widely used technology in today's big data world.

The Spark architecture is based on two important abstractions: the Resilient Distributed Dataset (RDD) and the Directed Acyclic Graph (DAG).
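To make the two abstractions more concrete, here is a minimal pure-Python sketch. It is not Spark's API: `MiniRDD` and its methods are hypothetical names invented for illustration. It assumes only that transformations are recorded lazily as a chain of parent links (a linear, degenerate DAG) and that results are computed from that lineage when an action such as `collect` is called.

```python
from typing import Callable, Iterable, List, Optional


class MiniRDD:
    """Toy stand-in for an RDD: immutable, lazy, and recomputable from lineage."""

    def __init__(self,
                 source: Optional[Iterable] = None,
                 parent: Optional["MiniRDD"] = None,
                 op: Optional[Callable[[Iterable], Iterable]] = None,
                 name: str = "source") -> None:
        self._source = source
        self._parent = parent
        self._op = op
        self.name = name

    def map(self, fn: Callable) -> "MiniRDD":
        # Transformation: only records a new node, nothing is computed yet.
        return MiniRDD(parent=self, op=lambda rows: (fn(r) for r in rows), name="map")

    def filter(self, pred: Callable) -> "MiniRDD":
        return MiniRDD(parent=self, op=lambda rows: (r for r in rows if pred(r)),
                       name="filter")

    def lineage(self) -> List[str]:
        # Walk the parent links back to the source to list the recorded steps.
        node, chain = self, []
        while node is not None:
            chain.append(node.name)
            node = node._parent
        return list(reversed(chain))

    def collect(self) -> list:
        # Action: evaluate the recorded chain, starting from the source.
        if self._parent is None:
            return list(self._source)
        return list(self._op(self._parent.collect()))


numbers = MiniRDD(source=range(1, 11))
evens_squared = numbers.filter(lambda x: x % 2 == 0).map(lambda x: x * x)

print(evens_squared.lineage())  # ['source', 'filter', 'map']
print(evens_squared.collect())  # [4, 16, 36, 64, 100]
```

Because each node keeps only its parent and its operation, a lost result can be rebuilt by calling `collect` again. That is the sense in which the dataset is "resilient" in this sketch.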

Fast data processing. In-memory processing makes Spark faster than Hadoop MapReduce: up to 100 times for data in RAM and up to 10 times for data in storage.

Spark Streaming framework. Although there are different input sources, such as files, databases and various endpoints, the interesting development in the current setup is how efficiently Apache Kafka can be used with the Spark Streaming platform. In addition to the default receiver-based approach, there is now a "direct" technique in which the performance and duplication issues have been resolved.

Beyond performance efficiency, we also need a simple approach to maintaining the distribution technique in our complex systems. The system also requires an accuracy of 99.9999%, which creates a tremendous need to handle failure scenarios. The "direct" technique reduces the complexity of handling those failures and keeps fewer replicated copies of the data across the system.
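The idea behind the "direct" technique can be sketched in plain Python. This is not the Kafka or Spark API: the in-memory `log`, `plan_batch`, `process_range` and the dictionary sink are all hypothetical stand-ins. The sketch assumes that each micro-batch is defined by an explicit offset range and that the committed offset advances only after the whole range has been processed, so a failed batch is replayed from the same offsets. Writing results keyed by offset makes the replay idempotent, which is an extra assumption about the sink.

```python
from typing import Dict, List, Tuple

# Hypothetical single-partition log standing in for a Kafka topic partition.
log: List[str] = [f"event-{i}" for i in range(10)]


def plan_batch(committed: int, max_records: int, log_end: int) -> Tuple[int, int]:
    """Choose the offset range [start, end) for the next micro-batch."""
    return committed, min(committed + max_records, log_end)


def process_range(start: int, end: int, sink: Dict[int, str],
                  fail_at: int = -1) -> None:
    """Write each record to the sink keyed by offset; optionally fail mid-batch."""
    for offset in range(start, end):
        if offset == fail_at:
            raise RuntimeError(f"simulated failure at offset {offset}")
        sink[offset] = log[offset].upper()


sink: Dict[int, str] = {}
committed = 0
attempts = 0
fail_once_at = 6  # simulate one crash in the middle of the second batch

while committed < len(log):
    start, end = plan_batch(committed, max_records=4, log_end=len(log))
    attempts += 1
    try:
        process_range(start, end, sink, fail_at=fail_once_at)
    except RuntimeError:
        fail_once_at = -1  # let the retry succeed
        continue           # offset not advanced: the same range is replayed
    committed = end        # advance only after the whole range succeeded

print(attempts, len(sink))  # 4 10
```

Three batches are needed for ten records, and one of them is retried, hence four attempts. The sink still holds exactly one entry per offset, because the replayed records overwrite the partial writes instead of appending duplicates.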

We need to create both trained models and test data in a streaming manner. We tried various approaches to updating the model as new data points stream in, and found that hierarchical models made incremental model updates much easier. These hierarchical models can be deployed easily with the Spark Streaming framework, because it internally supports micro-batch processing for this kind of model preparation. We also found that, given the flexibility and pure streaming nature of Apache Flink, reinforcement learning can be implemented easily, and the performance metrics for such implementations are quite competitive with those of other frameworks.
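As a rough illustration of incremental updates over micro-batches, the sketch below keeps a two-level model: one running mean per group and one overall running mean that serves as a fallback. This is an assumed, deliberately simple stand-in, not the models referred to above. The class names and the `(group, value)` record shape are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


class RunningMean:
    """Mean that is updated one value at a time, without storing past data."""

    def __init__(self) -> None:
        self.count = 0
        self.mean = 0.0

    def update(self, value: float) -> None:
        self.count += 1
        self.mean += (value - self.mean) / self.count


class HierarchicalMeanModel:
    """Two-level model: per-group means with the overall mean as fallback."""

    def __init__(self) -> None:
        self.overall = RunningMean()
        self.groups: Dict[str, RunningMean] = defaultdict(RunningMean)

    def update_batch(self, batch: Iterable[Tuple[str, float]]) -> None:
        # Called once per micro-batch; each record updates both levels.
        for group, value in batch:
            self.overall.update(value)
            self.groups[group].update(value)

    def predict(self, group: str) -> float:
        # Unseen groups fall back to the top level of the hierarchy.
        if group in self.groups:
            return self.groups[group].mean
        return self.overall.mean


model = HierarchicalMeanModel()
model.update_batch([("a", 2.0), ("a", 4.0), ("b", 10.0)])  # micro-batch 1
model.update_batch([("a", 6.0), ("b", 20.0)])              # micro-batch 2

print(model.predict("a"))  # 4.0
print(model.predict("b"))  # 15.0
```

Each update touches only counters and means, so the cost of absorbing a micro-batch depends on the batch size rather than on the amount of data seen so far.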

Spark vs MapReduce: Performance

Apache Spark processes data in random access memory (RAM), while Hadoop MapReduce persists data back to the disk after a map or reduce action. In theory, then, Spark should outperform Hadoop MapReduce. Nonetheless, Spark needs a lot of memory. Much like standard databases, Spark loads a process into memory and keeps it there until further notice for the sake of caching. If you run Spark on Hadoop YARN with other resource-demanding services, or if the data is too big to fit entirely into memory, then Spark could suffer major performance degradations.

MapReduce kills its processes as soon as a job is done, so it can easily run alongside other services with minor performance differences. Spark has the upper hand for iterative computations that need to pass over the same data many times.

The effectiveness of a big data application depends largely on its performance. Caching in Apache Spark can provide a 10x to 100x improvement in the performance of big data applications through its in-memory and disk persistence capabilities.
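Why caching helps iterative workloads can be shown with a small pure-Python sketch. It does not use Spark: `CountingSource`, `Dataset` and `iterate` are hypothetical names. The sketch assumes that an uncached dataset is recomputed from its source on every pass, while a cached one is computed on first use and then reused. It counts source reads instead of measuring time, so it makes no claim about actual speedups.

```python
from typing import Callable, Iterable, List, Optional


class CountingSource:
    """Hypothetical expensive source that counts how often it is read."""

    def __init__(self, rows: Iterable[int]) -> None:
        self._rows = list(rows)
        self.reads = 0

    def load(self) -> List[int]:
        self.reads += 1
        return [r * 2 for r in self._rows]  # stand-in for costly I/O and parsing


class Dataset:
    """Recomputes from its source on each access unless cache() was requested."""

    def __init__(self, compute: Callable[[], List[int]]) -> None:
        self._compute = compute
        self._use_cache = False
        self._cached: Optional[List[int]] = None

    def cache(self) -> "Dataset":
        self._use_cache = True  # lazy: nothing is computed until first use
        return self

    def rows(self) -> List[int]:
        if not self._use_cache:
            return self._compute()
        if self._cached is None:
            self._cached = self._compute()
        return self._cached


def iterate(dataset: Dataset, passes: int) -> int:
    """Iterative job that scans the same data on every pass."""
    total = 0
    for _ in range(passes):
        total += sum(dataset.rows())
    return total


uncached_source = CountingSource(range(5))
cached_source = CountingSource(range(5))

uncached_total = iterate(Dataset(uncached_source.load), passes=10)
cached_total = iterate(Dataset(cached_source.load).cache(), passes=10)

print(uncached_total == cached_total)              # True
print(uncached_source.reads, cached_source.reads)  # 10 1
```

Both runs produce the same result, but the cached run reads its source once instead of ten times. The trade-off is that the cached rows stay in memory for as long as the dataset is alive.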