31 October

Caching Overview

Caching is the process of storing a copy of a file or piece of data in a cache for quick access. While a cache is any temporary storage location for data or files, the term is most commonly used in the context of internet technology. DNS servers cache DNS records for faster lookups, CDN servers cache content to reduce latency, and web browsers cache JavaScript, HTML files, and images so that websites load faster.

A cache is a component that stores data so that future requests for that data can be served faster. Storing commonly used application data in memory provides high-throughput, low-latency access to it. By avoiding the high-latency access of a persistent data store, caching can dramatically improve application responsiveness when it is used well.

Generally, the data in a cache is stored in fast-access hardware such as RAM (random-access memory), and it can also be used in conjunction with software components. The primary purpose of a cache is to speed up data retrieval by removing the need to access the slower underlying storage layer. A cache trades capacity for speed: it transiently stores a subset of the data rather than the complete data set. Because RAM and in-memory engines support high rates of input/output operations per second (IOPS), caching improves data retrieval performance and reduces cost at scale.
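To make the capacity-for-speed trade-off concrete, here is a minimal sketch of a cache that holds only a few entries from a much larger data set. The `BoundedCache` class, the `database` dictionary, and the least-recently-used eviction rule are all assumptions chosen for illustration; the article does not prescribe an eviction policy.

```python
from collections import OrderedDict


class BoundedCache:
    """A small in-memory cache that keeps only the most recently used entries."""

    def __init__(self, capacity):
        self.capacity = capacity
        self._entries = OrderedDict()
        self.hits = 0
        self.misses = 0

    def get(self, key, load_from_store):
        if key in self._entries:
            self.hits += 1
            self._entries.move_to_end(key)  # mark as most recently used
            return self._entries[key]
        self.misses += 1
        value = load_from_store(key)  # slow path: the underlying storage layer
        self._entries[key] = value
        if len(self._entries) > self.capacity:
            self._entries.popitem(last=False)  # evict the least recently used entry
        return value


# Hypothetical backing store: 1,000 rows, far more than the cache can hold.
database = {n: f"row-{n}" for n in range(1000)}
cache = BoundedCache(capacity=3)

for key in [1, 2, 1, 3, 1, 4, 2]:
    cache.get(key, database.__getitem__)

print(cache.hits, cache.misses)
```

Only the keys that are requested repeatedly while still in the cache are served from memory; everything else falls through to the backing store.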

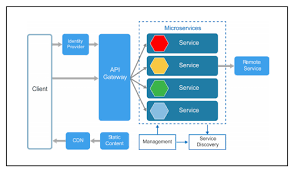

Achieving the same retrieval speed with traditional disk-based hardware and databases requires an organization to invest in additional resources. This drives up cost, and it would still be challenging to match the latency offered by in-memory caching. Caching can be applied at different technology layers, including operating systems, networking layers such as CDNs (content delivery networks) and DNS, databases, and web applications. It can reduce latency and improve IOPS for most read-heavy applications, such as Q&A portals, media sharing sites, gaming sites, and social networking sites. Cached information can include database query results, API requests and responses, web artifacts such as image files, HTML, and JavaScript, and the results of intensive calculations. In any of these applications, large data sets need to be accessed in real time across machines spanning hundreds of nodes, for example to serve a search. Caching makes this possible without delays on the website.
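As one illustration of caching the result of an intensive calculation, the sketch below uses `functools.lru_cache` from the Python standard library. The function `expensive_score` and the `call_count` counter are hypothetical stand-ins, not part of any application described here.

```python
from functools import lru_cache

call_count = 0  # counts how often the real calculation runs


@lru_cache(maxsize=128)
def expensive_score(n):
    """Stand-in for an intensive calculation."""
    global call_count
    call_count += 1
    return sum(i * i for i in range(n))


first = expensive_score(10_000)   # computed
second = expensive_score(10_000)  # served from the cache
info = expensive_score.cache_info()
print(call_count, info.hits, info.misses)
```

The second call returns the stored result without running the function body again.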

Understanding The Different Types of Caching:

Database Caching:

Your database’s speed and throughput are a major factor in your application’s overall performance.

Database caching increases throughput and lowers data retrieval latency for backend databases, which improves application performance. In this case, the cache acts as an adjacent data access layer next to your database that your applications can use to improve performance. You can apply a database cache to any type of database, including relational and NoSQL databases. Common methods for loading data into a cache include lazy loading and write-through.
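The two loading methods can be sketched side by side. In the example below, a plain dictionary stands in for the backend database, and the `WriteThroughCache` class and its method names are assumptions made for illustration. Writes update the database and the cache together (write-through), while reads of data that was never written through the cache are loaded on first access (lazy loading).

```python
class WriteThroughCache:
    """Keeps a cache in step with a database on every write."""

    def __init__(self, database):
        self.database = database  # hypothetical stand-in: a dict
        self.cache = {}

    def write(self, key, value):
        # Write-through: update the database and the cache in the same operation.
        self.database[key] = value
        self.cache[key] = value

    def read(self, key):
        if key in self.cache:
            return self.cache[key]
        # Lazy loading: fetch from the database only when first requested.
        value = self.database[key]
        self.cache[key] = value
        return value


database = {"user:1": "Ada"}  # existed before the cache was introduced
store = WriteThroughCache(database)

store.write("user:2", "Grace")     # cached immediately on write
print("user:2" in store.cache)
print("user:1" in store.cache)     # not cached until it is read
print(store.read("user:1"))
print("user:1" in store.cache)
```

Write-through keeps the cache fresh at the cost of an extra write on every update; lazy loading caches only what is actually read, at the cost of a slower first read.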

General Cache:

For use cases that do not need disk-based durability or transactional data support, using an in-memory key-value store as a standalone database is an effective way to build high-performance applications. Apart from improved speed, applications also gain throughput at a lower price point. A general cache can be used for reference data such as category listings, product groupings, and profile information.

CDN Caching:

A content delivery network, or CDN, is a network that caches web content such as videos, images, or webpages on proxy servers located closer to web users than the origin server is. Because the proxy server is close to the user making the request, a content delivery network delivers the requested content faster. To make this easier to understand, you can think of a CDN as a chain of food stores. Instead of making the trip to where the food is originally grown, you only need to visit a local food store to get it. This takes minutes, as opposed to the hours or days it would take to go to the farm. In the same way, CDN caches stock web page content so that web pages load more easily and quickly.

How is content cached?

When a user requests content on a website that uses a CDN, the content delivery network retrieves the content from the origin server and saves a copy of it on the CDN cache server for future requests. This data remains in the CDN cache and is made available whenever it is requested. CDN caching servers are located in data centers in different parts of the world, which keeps the servers close to the users who need to access the content. The closer a server is to a user, the faster the content retrieval process.
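The request flow described above can be sketched as follows. `OriginServer` and `EdgeServer` are hypothetical classes written for this illustration, not the API of any real CDN, and the sketch leaves out expiry: production CDNs also decide how long a copy may be kept, typically from HTTP headers such as `Cache-Control`.

```python
class OriginServer:
    """Stand-in for the website's origin server."""

    def __init__(self, content):
        self.content = content
        self.requests_served = 0

    def fetch(self, path):
        self.requests_served += 1
        return self.content[path]


class EdgeServer:
    """Stand-in for a CDN cache server located near the user."""

    def __init__(self, origin):
        self.origin = origin
        self.cache = {}

    def get(self, path):
        if path not in self.cache:
            # First request: fetch from the origin and keep a copy.
            self.cache[path] = self.origin.fetch(path)
        # Later requests are answered from the local copy.
        return self.cache[path]


origin = OriginServer({"/logo.png": b"image-bytes"})
edge = EdgeServer(origin)

first = edge.get("/logo.png")
second = edge.get("/logo.png")
print(origin.requests_served)
```

However many users request the same path through this edge server, the origin is contacted only once for it.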

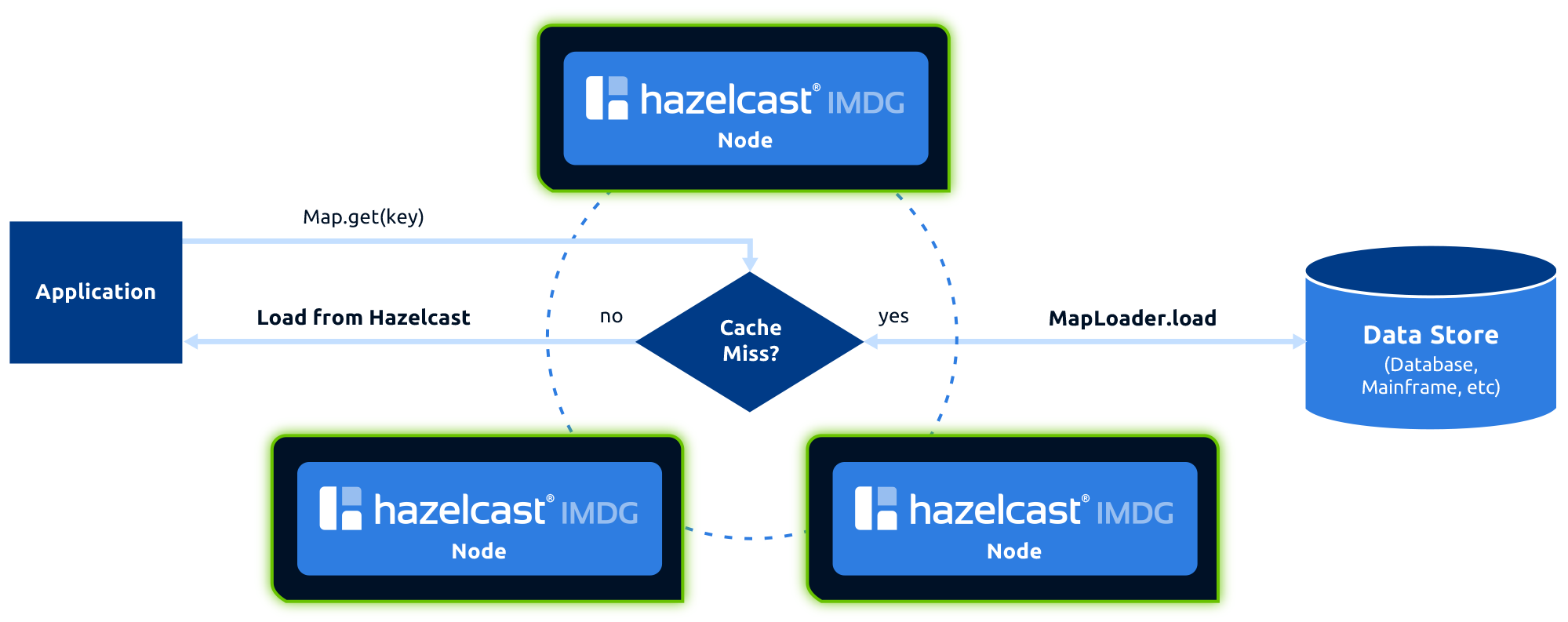

Caching puts actively used data in memory, where it can be accessed significantly more quickly. While this sounds simple, it can become very complex, as real-world systems are wildly diverse and constantly changing. Through meticulous engineering, deep caching expertise, and a focused commitment to customer needs, Hazelcast handles that diversity with a robust in-memory computing platform, delivering the benefits of distributed caching where high-speed, high-volume systems need it most.

Scalability:

Hazelcast in-memory caching is designed to scale quickly and smoothly, and nodes cluster automatically without production interruptions. A multitude of scaling options are offered, including High-Density Memory Store for large-scale enterprise use cases. The Hazelcast In-Memory Computing Platform is also cloud-ready, and its elastic scaling makes it a good fit for cloud deployments.

Manageability:

The Hazelcast In-Memory Computing Platform puts the power of cache management within reach and keeps the total cost of ownership low by focusing on ease of development, implementation, and operation. The platform is surprisingly simple to set up and configure, offering remarkable resilience and high availability.

On Demand / Cache Aside

The application tries to retrieve data from the cache. When there's a "miss" (the cache doesn't have the data), the application fetches the data from the data store and is responsible for storing it in the cache so that it will be available the next time. The next time the application tries to get the same data, it finds what it's looking for in the cache.

To avoid serving cached data that has since changed in the database, the application invalidates the cached data when it changes the data store, through specific logic in an Action. Another possibility is to update the stale cache entry with the new data as part of the Action logic instead of invalidating it. When a set of (read-only) Entities that expose CRUD Actions is queried continuously, it might be wiser to implement the cache access (reads and writes) inside the exposed CRUD operations, making it transparent to the consumers of those Entities.
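A minimal sketch of the cache-aside pattern with invalidation on write is shown below. The `database` and `cache` dictionaries and the `get_product` and `update_product` functions are hypothetical names used for illustration; in the platform the text refers to, this logic would live inside Actions instead.

```python
# Hypothetical stand-ins for the data store and the cache.
database = {"product:42": {"name": "Lamp", "price": 30}}
cache = {}


def get_product(key):
    """Read path: try the cache first, fall back to the data store on a miss."""
    if key in cache:
        return cache[key]
    value = database[key]  # miss: fetch from the data store
    cache[key] = value     # store it so the next read is a hit
    return value


def update_product(key, value):
    """Write path: change the data store, then invalidate the cached copy."""
    database[key] = value
    cache.pop(key, None)  # the next read reloads the fresh value


before = get_product("product:42")["price"]  # miss, then cached
update_product("product:42", {"name": "Lamp", "price": 25})
after = get_product("product:42")["price"]   # reloaded after invalidation
print(before, after)
```

To update the cache instead of invalidating it, `update_product` would assign `cache[key] = value` in place of the `pop` call.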